I have server-side code that processes an imported list of several thousand product items.

The process involves creating warehouse locations and then material receipt stock entries for each item.

It skips creating a warehouse location that already exists.

If I execute the code with stock entry creation enabled, it fails after the first successful stock entry with:

> frappe.exceptions.ValidationError: Warehouse Lethal Widget - LSSA is not linked to any account, please mention the account in the warehouse record or set default inventory account in company My Company.

On the other hand …

If, after reverting the database, I execute the code with stock entry creation disabled, all the required warehouse locations are created correctly.

Then, if I enable stock entry creation and rerun the same script, all the required stock entries get created correctly.

Here’s the code:

```python
import frappe

# LG, NAME_COMPANY, ABBR_COMPANY and createStockEntry are defined elsewhere in the script.


def createStockEntryForLocation(item, numbers):
    LG("Create {} item stock entry for location :: {}. ({})".format(len(numbers), item.legacy_code, NAME_COMPANY))

    whs_name = "{} - {}".format(item.legacy_code, ABBR_COMPANY)

    try:
        # Reuse the warehouse if it already exists
        existing_warehouse = frappe.get_doc('Warehouse', whs_name)
        LG("Location '{}' already exists".format(existing_warehouse.name))
    except frappe.DoesNotExistError:
        # Otherwise create it under 'Illegal Widgets', linked to the contraband account
        new_warehouse = frappe.get_doc({
            'doctype': 'Warehouse',
            'warehouse_name': item.legacy_code,
            'parent_warehouse': "{} - {}".format('Illegal Widgets', ABBR_COMPANY),
            'company': NAME_COMPANY,
            'account': "{} - {}".format('1.1.5.06 - Contraband', ABBR_COMPANY),
            'warehouse_type': 'Illicit'
        })
        new_warehouse.save()
        new_warehouse.submit()

    aWarehouse = frappe.get_doc('Warehouse', whs_name)
    aWarehouse.reload()

    LG("Have location. Creating '{}' item Stock Entry for {}".format(numbers, aWarehouse.name))

    # Toggle: False creates warehouses only; True also creates the stock entries
    if True:
        createStockEntry(frappe._dict({
            'serial_numbers': numbers,
            'warehouse_name': aWarehouse.name
        }))
```

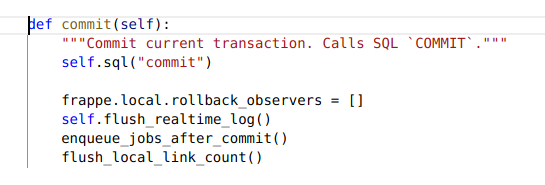
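Because creating all the warehouses in one run and all the stock entries in a second run works, one possible single-run variant is to split the loop into two passes: every warehouse first, then every stock entry. The sketch below is plain Python under stated assumptions. `ensure_warehouse`, `create_stock_entry` and `numbers_for` are hypothetical hooks standing in for the warehouse-creation code and the `createStockEntry` helper above. It has not been run against Frappe, and whether it avoids the error within a single process is an assumption, not a verified result.

```python
from types import SimpleNamespace
from typing import Callable, Dict, Iterable, List


def import_in_two_passes(
    items: Iterable,
    numbers_for: Callable[[object], List[str]],
    ensure_warehouse: Callable[[object], str],
    create_stock_entry: Callable[[str, List[str]], None],
) -> Dict[str, str]:
    """Create every warehouse first, then every stock entry.

    The three callables are hypothetical hooks; in the real script they
    would wrap the existing Warehouse creation and createStockEntry calls.
    """
    items = list(items)  # iterated twice, so materialize once

    # Pass 1: make sure every warehouse exists before any stock entry is made.
    warehouse_for = {}
    for item in items:
        warehouse_for[item.legacy_code] = ensure_warehouse(item)

    # Pass 2: create the material receipt for each item's warehouse.
    for item in items:
        create_stock_entry(warehouse_for[item.legacy_code], numbers_for(item))

    return warehouse_for


if __name__ == "__main__":
    log = []

    def fake_ensure_warehouse(item):
        # Hypothetical stand-in: records the call and returns a warehouse name
        log.append("warehouse " + item.legacy_code)
        return item.legacy_code + " - LSSA"

    def fake_create_stock_entry(warehouse_name, numbers):
        # Hypothetical stand-in: records the call only
        log.append("stock entry " + warehouse_name)

    demo_items = [SimpleNamespace(legacy_code="Lethal Widget"),
                  SimpleNamespace(legacy_code="Other Widget")]
    import_in_two_passes(demo_items, lambda item: ["SN-0001"],
                         fake_ensure_warehouse, fake_create_stock_entry)
    print(log)
    # ['warehouse Lethal Widget', 'warehouse Other Widget',
    #  'stock entry Lethal Widget - LSSA', 'stock entry Other Widget - LSSA']
```

Injecting the Frappe calls as callables keeps the ordering logic testable without a site, and the same two-pass structure can wrap the real calls unchanged.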

My guess is that some scheduler task has to run after a new warehouse is created, so it is necessary to wait before creating any records that depend on it.

Is that how it works?

What’s the best way to work with this?